Next-Gen Nvidia GPUs Empowered by Micron's Production of Ultra-Fast 24GB HBM3E AI Memory Chips, Set for Release in Q2

Innotron, the parent company of ChangXin Memory Technologies (CXMT), plans to invest $2.4 billion in a new advanced packaging facility in Shanghai. According to Bloomberg, this plant will focus on packaging high-bandwidth memory (HBM) chips and will begin production by mid-2026. Innotron will fund the facility with money from various investors, including GigaDevice Semiconductor.

The new facility will concentrate on various advanced packaging technologies, such as interconnecting stacked memory devices using through-silicon vias (TSV), which is crucial for producing HBM. According to the Bloomberg report, the facility is anticipated to have a “packaging capacity of 30,000 units per month.”

If the information about the packaging facility is accurate, CXMT will produce HBM DRAM dies (something it has been planning for a while), while Innotron will assemble them into HBM stacks.

Given that the packaging facility will cost $2.4 billion, it will likely not just produce HBM for AI and HPC processors but also provide other advanced packaging services. We do not know whether this includes HBM integration with compute GPUs or ASICs, but this could be a possibility if Innotron, CXMT, or GigaDevice manages to secure a logic process technology (e.g., 65 nm-class) required to build the silicon interposers used to connect HBM stacks to processors.

Leading Chinese OSATs, such as JCET, Tongfu Microelectronics, and SJ Semiconductor, already have HBM integration technology, so Innotron does not have to develop its own method. Earlier this year, JCET reportedly showcased its XDFOI high-density fan-out package solution, which is specifically designed for HBM. Tongfu Microelectronics is reportedly working with a leading China-based DRAM maker, likely CXMT, on HBM projects, too.

China needs its own HBM. Chinese companies are developing AI GPUs but are currently limited to using HBM2 technology, according to DigiTimes. For instance, Iluvatar CoreX’s Tiangai 100 GPU and MetaX C-series GPU are equipped with 32 GB and 64 GB of HBM2, respectively, but HBM2 is not produced in China.

This $2.4 billion investment is part of China’s broader strategy to enhance its semiconductor capabilities in general and advanced packaging technologies in particular. Whether this project will be a financial success remains to be seen. Given that the U.S. government does not allow the export of advanced components made using American technology to China without a license, China has no other choice but to build its own HBM supply chain.

- Title: Next-Gen Nvidia GPUs Empowered by Micron's Production of Ultra-Fast 24GB HBM3E AI Memory Chips, Set for Release in Q2

- Author: Kevin

- Created at: 2024-08-18 11:30:18

- Updated at: 2024-08-19 11:30:18

- Link: https://hardware-reviews.techidaily.com/next-gen-nvidia-gpus-empowered-by-microns-production-of-ultra-fast-24gb-hbm3e-ai-memory-chips-set-for-release-in-q2/

- License: This work is licensed under CC BY-NC-SA 4.0.

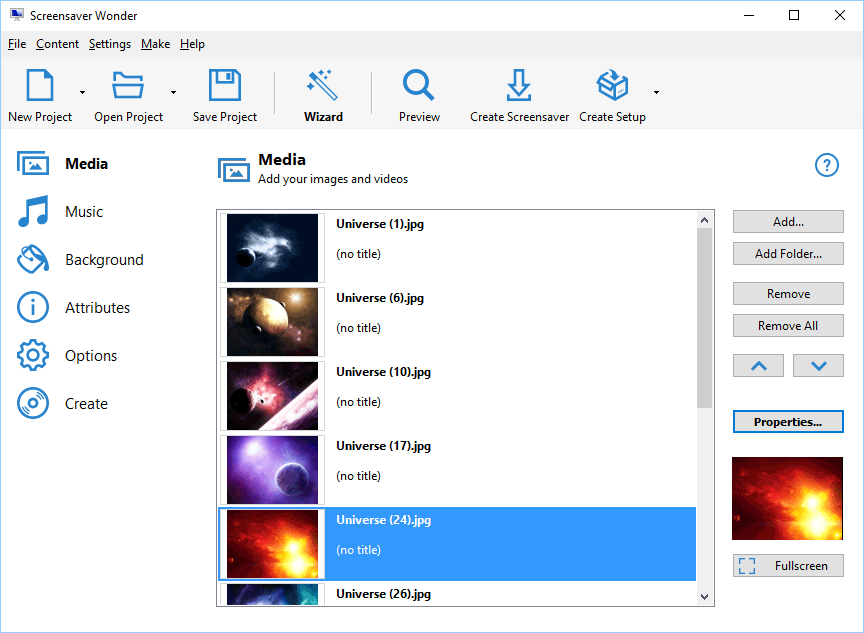
